OpenGL 1.2 => 3.3 Hmm

The fun thing about VtV for me is:

- No pressure; I'm already happy to have just gotten it out there

- Kind of a crazy canvas to mess around with

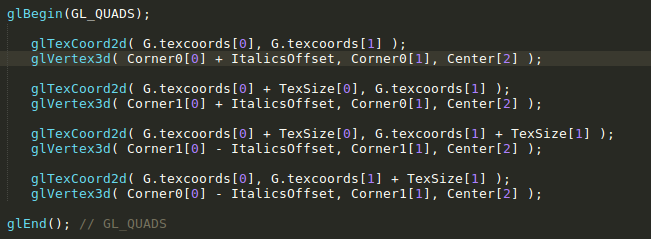

There are a lot of things I can do to mess around with VtV but they all sort of depend on updating the render engine. It's written in OpenGL 1.2 direct mode (with rather heavy use of display lists) which looks like this:

First thing you should know: this is actually super cool and convenient. It's called OpenGL immediate mode. OpenGL is sitting there, essentially a state machine, and you send it commands: "Begin drawing quads. For the next point you draw, use this texture coordinate. The first point on the quad is HERE. For the next point you draw, use THIS texture coordinate (a bit different). And the second point goes HERE." and so on for four points! And it draws that on the screen. When people talk about how hard it is to get a "red triangle to draw on the screen using OpenGL" they very much do NOT mean OpenGL immediate mode, which always was incredibly fast to work with (and still is-- I could definitely prototype interesting things a lot faster with this if I had something like it, today.)
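To make the "state machine" idea concrete, here is a minimal sketch in plain C. It does not call OpenGL at all: `ImContext` and the `im_*` functions are hypothetical stand-ins that only mimic the pattern of the real `glBegin`, `glTexCoord2f`, `glVertex3f` and `glEnd` calls, so it can run without a GL context.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for the GL state machine; this is NOT real OpenGL. */
typedef struct { float u, v, x, y, z; } Vertex;

typedef struct {
    float cur_u, cur_v;   /* the "current" texture coordinate (latched state) */
    Vertex out[64];       /* points emitted so far */
    size_t count;
    int in_primitive;     /* nonzero between im_begin and im_end */
} ImContext;

/* Plays the role of glBegin(GL_QUADS): start describing quads. */
void im_begin(ImContext *c) { c->count = 0; c->in_primitive = 1; }

/* Plays the role of glTexCoord2f: only changes state, emits nothing. */
void im_texcoord(ImContext *c, float u, float v) { c->cur_u = u; c->cur_v = v; }

/* Plays the role of glVertex3f: emits a point stamped with the current state. */
void im_vertex(ImContext *c, float x, float y, float z) {
    assert(c->in_primitive && c->count < 64);
    Vertex vtx = { c->cur_u, c->cur_v, x, y, z };
    c->out[c->count++] = vtx;
}

/* Plays the role of glEnd: returns how many complete quads were described. */
size_t im_end(ImContext *c) { c->in_primitive = 0; return c->count / 4; }

/* One textured unit quad: "use this texture coordinate, the point goes HERE",
   repeated four times. */
size_t draw_unit_quad(ImContext *c) {
    im_begin(c);
    im_texcoord(c, 0.0f, 0.0f); im_vertex(c, 0.0f, 0.0f, 0.0f);
    im_texcoord(c, 1.0f, 0.0f); im_vertex(c, 1.0f, 0.0f, 0.0f);
    im_texcoord(c, 1.0f, 1.0f); im_vertex(c, 1.0f, 1.0f, 0.0f);
    im_texcoord(c, 0.0f, 1.0f); im_vertex(c, 0.0f, 1.0f, 0.0f);
    return im_end(c);
}
```

The thing to notice is that `im_texcoord` never draws anything. It just sets a value that the next `im_vertex` picks up, which is the same conversational style the real immediate mode calls have.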

Second thing you should know: this works OVER THE NETWORK! It's cool-- all these OpenGL immediate mode commands can be packed into some kind of binary stream (they are all just commands with fixed parameters) and jammed over a wire. Imagine a world where you could log into a server and it draws your user interface, fonts and all, but without copying pixels! (Note: X11, the Linux windowing system, used to work something like this, too-- I don't think it used OpenGL directly.) This feature was maybe used in some context, but I'm not aware of any. It's basically a super cool idea and immediate mode was sort of designed with that in mind, but... as far as I know nothing really came of it. I think it's still a really valid and interesting idea.

Third thing you should know: this is FASTER THAN YOU THINK. It really is. And there are tricks to make it faster, in particular Display Lists. These basically let you bundle a bunch of these commands into a set, upload them into video RAM (incredibly fast for the graphics card to access) and then just say, "execute that bunch of commands, now."
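The record-once, replay-many idea behind display lists can be sketched without a GPU. Everything below is a hypothetical mock in plain C (`DisplayList`, `dl_record`, `dl_call` are made-up names); in real OpenGL 1.x the corresponding calls would be `glNewList`, `glEndList` and `glCallList`, and the driver decides where the compiled list actually lives.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical recorder; NOT the real display list API. */
typedef enum { CMD_TEXCOORD, CMD_VERTEX } CmdKind;
typedef struct { CmdKind kind; float a, b, c; } Cmd;

typedef struct {
    Cmd cmds[128];   /* the bundled commands, in the order they were issued */
    size_t count;
} DisplayList;

/* "Compile" step: append one command to the list instead of executing it. */
void dl_record(DisplayList *dl, CmdKind kind, float a, float b, float c) {
    assert(dl->count < 128);
    Cmd cmd = { kind, a, b, c };
    dl->cmds[dl->count++] = cmd;
}

/* Record a single textured triangle: three texcoord/vertex pairs. */
void dl_record_triangle(DisplayList *dl) {
    dl_record(dl, CMD_TEXCOORD, 0.0f, 0.0f, 0.0f);
    dl_record(dl, CMD_VERTEX,   0.0f, 0.0f, 0.0f);
    dl_record(dl, CMD_TEXCOORD, 1.0f, 0.0f, 0.0f);
    dl_record(dl, CMD_VERTEX,   1.0f, 0.0f, 0.0f);
    dl_record(dl, CMD_TEXCOORD, 0.0f, 1.0f, 0.0f);
    dl_record(dl, CMD_VERTEX,   0.0f, 1.0f, 0.0f);
}

/* "Execute" step: replay the whole list. This mock only counts the
   vertices it would have drawn. */
size_t dl_call(const DisplayList *dl) {
    size_t vertices = 0;
    for (size_t i = 0; i < dl->count; i++) {
        if (dl->cmds[i].kind == CMD_VERTEX) vertices++;
    }
    return vertices;
}
```

Recording happens once; `dl_call` can then be issued every frame. Note that the list is a mixed bag of command kinds, not a plain array of vertices.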

Fourth thing you should know: nobody does this anymore. Sadface.

But why? Not without good reason; there are two, really:

Every command has sort of a lot of overhead. But more than that, a collection of commands is non-uniform; a 3D model with 1000 vertices will not consist of 1000 glVertex3d calls, instead it will have 1000 glVertex3d calls mixed in with a bunch of other things. Because the data being stored isn't uniform, there are some limitations to what you can do with it. If you want to change one point inside, you have to re-do the whole list of commands. Likewise, you can't somehow transform every vertex inside a list of these commands, without executing them over again. But if you just had a list of 1000 3D vertices (say float[3] or whatever), well you can do a lot of things. And a GPU, if it's given the right constraints on reading/writing memory, can do a lot of things AT ONCE.
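As a rough illustration of why a plain list of vertices is easier to work with, here is a small sketch in C. The names `Vec3`, `translate_all` and `set_vertex` are made up for this example. On a CPU the loop runs one element at a time, but no iteration depends on any other, which is the property a GPU needs to run them all at once.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { float x, y, z; } Vec3;

/* Move every vertex by the same offset. Each iteration touches only its
   own element, so the work could be split across many threads. */
void translate_all(Vec3 *verts, size_t n, Vec3 offset) {
    for (size_t i = 0; i < n; i++) {
        verts[i].x += offset.x;
        verts[i].y += offset.y;
        verts[i].z += offset.z;
    }
}

/* Changing one point is a single array write; nothing is re-recorded. */
void set_vertex(Vec3 *verts, size_t n, size_t index, Vec3 value) {
    assert(index < n);
    verts[index] = value;
}
```

Compare this with a recorded command list, where both operations would mean rebuilding the list from scratch.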

The commands kind of define what is possible. Suffice it to say you might want to do something really interesting with vertices-- maybe they exist in 9 dimensions, and those dimensions can be reduced over some parameter (call it T) to produce 3 spatial dimensions. This may sound exotic but that's exactly how an efficient particle system might be implemented. But what do we do? Add a glVertex9d call? What if we want 11 dimensions? Again, expanding this kind of command set is sort of a difficult proposition.
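One possible reading of the "9 dimensions reduced over T" idea, and this is an assumption rather than something spelled out above, is a particle stored as start position, velocity and acceleration (3 + 3 + 3 floats) and evaluated at time T with the usual constant-acceleration formula. A minimal sketch in C, with made-up names `Particle9` and `particle_position`:

```c
#include <assert.h>

/* Assumed layout: 9 floats per particle. */
typedef struct {
    float p[3];   /* start position */
    float v[3];   /* velocity */
    float a[3];   /* acceleration */
} Particle9;

typedef struct { float x, y, z; } Vec3;

/* Reduce the 9 stored values to 3 spatial ones at time t:
   position = p + v*t + 0.5*a*t^2, computed per axis. */
Vec3 particle_position(const Particle9 *s, float t) {
    float out[3];
    for (int i = 0; i < 3; i++) {
        out[i] = s->p[i] + s->v[i] * t + 0.5f * s->a[i] * t * t;
    }
    Vec3 r = { out[0], out[1], out[2] };
    return r;
}
```

The stored data never changes; only T does. In a shader-based renderer the same function would run once per particle on the GPU, with T passed in as a single shared value.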

Through practical experimentation it was soon determined that the best thing to do was have uniform data, and let the GPU process it in parallel. The "process it in parallel" is the place for all the creativity! Instead of having the "get creative" part of writing a rendering engine be mixed in with the data, what you do (now) is define the data separately (and in a very flexible way) and then write some small programs that operate all at once on that data. Voila! You still have freedom of creativity; your only constraint now is that you have to design it in a way that respects what the GPU needs in order to allow your programs to run in parallel. And so were born vertex and pixel (now fragment) shaders.

As fast as immediate mode actually is/was, separating data this way basically elevates GPUs into the stratosphere in terms of their ability. This is a side note, but if your top of the line Intel i7 CPU with 8 cores or whatever is put next to a modern GPU, and you have the data organized correctly for the GPU to process it, the GPU is not just 2 or 10 times faster. It can feel like a million times faster. Writing code for a GPU when you are used to writing code for a CPU feels basically like stepping into an alternate dimension.

So this brings us to my problem. Although I love immediate mode, and actually did toy with the idea of sticking with OpenGL 1.2 while I "messed around" with VtV, I think the more satisfying thing to do will be to move to a modern rendering framework like the one OpenGL 3.3 (what I'm using for my other projects) provides.

The problem is, the two cannot really coexist.

Writing code for OpenGL 3.3, at first, is fairly painstaking. (At best, it's still a lot harder than OpenGL 1.2 immediate mode.) So let's suppose I want to convert this project to OpenGL 3.3. Then I have to take all the rendering code and convert it at once, before I can even see a single pixel drawn on the screen! I can't just, say, take the part that renders lightning or spaceships and update that, because then part of the code will be 1.2 and part will be 3.3, and actually I have no idea what will happen then other than GL will probably complain that one or the other is invalid for the current context.

By the time I get to converting everything over, everything will be broken, and I will have to fix things one at a time. Most likely after doing this NOTHING would even correctly draw to the screen.

This is not going to be fun. I really would like to be having PURE FUN with this, and ONLY FUN. It's just my agenda here, sorry.

What IS fun is cleaning up your room! Bit by bit underneath that messy pile of socks and garbage a clean floor and organized shelves emerge. This type of programming is fun, too! Take a big messy project and piece by piece shuffle it into a coherent, lovely whole. I've already done a bit of that on VtV. I'd like to continue that approach! I want to fix rendering for ONE part at a time, and gradually have the thing become nicer.

You'll remember the main creative difference between immediate mode and modern rendering is that with modern rendering you sort the data out FIRST. So perhaps I can tackle it that way-- is there a way to sort the data and the "creative" code into two piles, first, in OpenGL 1.2? From there I'll already have my two piles, and I can (hopefully) then make one more step of changing the format of the piles. By then I'll already know the logic behind how I have separated things out is working, so making the final leap will be much more manageable.

The answer to this question is I don't really know, but I *think* I can if I use OpenGL 2.0 display lists and shaders. These are sort of halfway between immediate mode and modern rendering. A bit of gory detail here, but the main difference between GL 2.0 and GL 3.x is that in 2.0 there is still the concept of a "fixed pipeline". All this really means is that OpenGL 2.0 expects you to structure things a bit more narrowly than you are allowed to in 3.3. But the advantage is that it means that things can still coexist with immediate mode-- I think.

The reason immediate mode cannot coexist in 3.3 is that a lot of the concepts implied by immediate mode are now fully programmable in 3.3. So for instance in OpenGL 1.2 and 2.0 you have the concept of a "modelview matrix"; this sort of helps define the position, rotation, and so on of the thing you are drawing. In GL 3.x you no longer have this as a fixed concept-- it's up to you if you want to do it. (This is a big part of why people complain that it's such a big leap to get a single triangle drawing in 3.x-- in a sense, the framework is so flexible that before you can get anywhere you have a lot of work to do.)
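To give a feel for what "it's up to you" means in practice, here is a small sketch of a hand-rolled modelview matrix in C. The `Mat4` type and the `mat4_*` helpers are made up for this example; the column-major layout is chosen to match the convention OpenGL matrices normally use. In the old fixed pipeline, calls such as `glLoadIdentity`, `glTranslatef` and `glScalef` did this bookkeeping for you; with 3.x-style rendering you would typically build the matrix yourself and hand it to a shader, for example with `glUniformMatrix4fv`.

```c
#include <assert.h>

/* 4x4 matrix, column-major: element (row, col) lives at m[col * 4 + row]. */
typedef struct { float m[16]; } Mat4;

Mat4 mat4_identity(void) {
    Mat4 r = { { 1, 0, 0, 0,   0, 1, 0, 0,   0, 0, 1, 0,   0, 0, 0, 1 } };
    return r;
}

/* Translation goes in the last column (indices 12, 13, 14). */
Mat4 mat4_translate(float tx, float ty, float tz) {
    Mat4 r = mat4_identity();
    r.m[12] = tx; r.m[13] = ty; r.m[14] = tz;
    return r;
}

/* Uniform scale goes on the diagonal. */
Mat4 mat4_scale(float s) {
    Mat4 r = mat4_identity();
    r.m[0] = s; r.m[5] = s; r.m[10] = s;
    return r;
}

/* Matrix product a * b: applying the result is "b first, then a". */
Mat4 mat4_mul(Mat4 a, Mat4 b) {
    Mat4 r;
    for (int col = 0; col < 4; col++) {
        for (int row = 0; row < 4; row++) {
            float sum = 0.0f;
            for (int k = 0; k < 4; k++) {
                sum += a.m[k * 4 + row] * b.m[col * 4 + k];
            }
            r.m[col * 4 + row] = sum;
        }
    }
    return r;
}

/* Transform a 3D point, treating it as (x, y, z, 1). */
void mat4_apply_point(const Mat4 *m, const float in[3], float out[3]) {
    for (int row = 0; row < 3; row++) {
        out[row] = m->m[0 + row] * in[0] + m->m[4 + row] * in[1]
                 + m->m[8 + row] * in[2] + m->m[12 + row];
    }
}
```

For example, `mat4_mul(mat4_translate(1, 2, 3), mat4_scale(2))` scales a point by 2 and then moves it by (1, 2, 3). None of this is hard, but it is all work the fixed pipeline used to do for you.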

So anyway, that will be my first approach. I already know 3.3 very well, but 2.0 is completely unknown to me. However, I will construct the code for 2.0 in a way that will be easy to convert to 3.3 when the time comes. The goal will be just to get all the code converted to vertex arrays + simple shaders without really changing how anything looks (much.) Once that's done, I'll pivot everything at once to 3.3, and at that point, I will have a lot more creative freedom.

Anyway enjoy the game!!!

Get Venture the Void

Venture the Void

May you find yourself lost and amused

| | |
| --- | --- |
| Status | In development |
| Author | Kitty Lambda Games |
| Genre | Adventure |
